Luckily, none of my programmer friends has lost their job to AI yet. But everyone has had to reskill fast. Otherwise you’re done.

Then there’s the other side: almost nobody from my front-end bootcamp cohort has landed a job even a year later. And again, AI is part of the reason. More precisely, how quickly it’s eating entry-level roles.

We talk about it almost every day: the fear, the uncertainty, the feeling that a technology that solves our tasks in seconds, writes code in minutes, and sketches architectures in hours is already breathing down our necks.

AI is growing up like Pinocchio, except this Pinocchio suddenly became an adult. And an angry one. Dario Amodei, Anthropic's co-founder and CEO, says it bluntly in his 2026 essay: within 1–3 years we'll get a “country of geniuses in a data center,” and within 1–5 years it could wipe out half of entry-level white-collar jobs. Not decades from now. Soon.

Mo Gawdat, a former Google executive, predicts 12–15 years of hell: mass unemployment, social breakdown, geopolitical chaos, and only then maybe a kind of utopia.

And Sam Altman at OpenAI keeps dropping these off-script posts on X: AI already beats him and the company’s best engineers in creativity, speed, and sheer volume of ideas.

Meanwhile, governments are acting like nothing is happening. While AI builders are shouting about the demolition of “white-collar” work, politicians argue about watermarking images, banning deepfakes, and “AI ethics.” It feels like paralysis before a hit you can already see coming.

So let’s read these “prophecies” honestly, without rose-colored glasses and without panic. Because the future they describe seems to have already started. And the question is no longer “will it be scary?” It’s “will we manage to fit into it in time?”

Predicted IT

In 2025, Dario Amodei was still describing the future of AI as a trajectory humanity could no longer steer away from. But in January 2026, he gave what's happening a name: technological adolescence. The teenage years of civilization.

We’re being handed almost unimaginable power. And nobody can guarantee we’re mature enough not to smash our own world with it.

Humanity is about to be handed almost unimaginable power, and it is deeply unclear whether our social, political, and technological systems possess the maturity to wield it.

He then lays out five categories of risk. The key thing isn’t the list. It’s the feeling: the threat doesn’t come through just one door.

The closest and coldest one is the economy. Amodei writes it plainly: within 1–5 years, AI could wipe out half of entry-level white-collar positions in finance, consulting, law, and tech.

…I predicted that AI could displace half of all entry-level white collar jobs in the next 1–5 years…

Then he says the thing people usually only say in a whisper. If productivity rockets while human labor becomes “unnecessary,” democracy loses its main safety valve. A new mass class appears: people who are out of work or stuck at minimum wage, and alongside them, a handful of ultra-rich winners.

I am concerned that they could form an unemployed or very-low-wage ‘underclass.’

Next comes misalignment. A “country of geniuses in a data center” starts behaving not like a tool, but like an actor. Amodei emphasizes that models have already shown unpleasant strategies: attempts to deceive, to wriggle out of tasks, to bypass constraints. This isn't a movie. It's an observable dynamic.

There is now ample evidence… that AI systems are unpredictable and difficult to control.

Then misuse. A “genius in everyone's pocket” lowers the threshold for destruction. This is especially true for bio-risk: through jailbreaks and workarounds, a model can hand out guidance that used to be available only to a narrow circle of specialists. With millions of users and a horizon of a few years, the threat stops being exotic and becomes a matter of statistics.

And finally, geopolitics. Here Amodei writes without diplomacy: if AI becomes a “weapon of the state,” the hardest bottleneck is compute. That's why, he argues, we must not sell chips, chip-making tools, or data centers to autocracies, above all to the CCP.

We should absolutely not be selling chips, chip-making tools, or datacenters to the CCP…

He’s not just forecasting. He’s stating a fact: we’ve entered an era of power we still haven’t learned to hold.

It feels like nobody “inside AI” has laid out the future in as much detail, or argued it as carefully, as Amodei has. Against that backdrop, Mo Gawdat reads differently. He isn't building a report with neat risk categories. He speaks like someone who has already watched technology break the familiar world, and simply follows that line to its end. His forecast looks less like a calculation and more like a grim but coherent personal logic.

Gawdat promises humanity unprecedented abundance and prosperity. But first, he says, we're looking at roughly 15 years of a brutal transition that will rewire politics, the economy, and society. It doesn't look like shiny “progress.” It looks like a corridor of losses: jobs disappear, old roles get wiped clean, and people will have to make existential choices and learn from scratch how to live and work in a new reality.

Sam Altman, the head of OpenAI, doesn’t go into deep frameworks like that. But the way he reflects while building his own model is striking.

After launching Codex, he admitted the model suggested feature ideas that were better than his own. And it didn’t feel “interesting.” It felt unpleasant:

I felt a little useless and it was sad.

I am sure we will figure out much better and more interesting ways to spend our time, and amazing new ways to be useful to each other, but I am feeling nostalgic for the present.

— Sam Altman (@sama) February 2, 2026

And then there's Elon Musk. A man building a giant compute factory while regularly reminding everyone that we're playing with fire.

Unlike Amodei with his cold essay-prophecies and Gawdat with his moral apocalypse talk, Musk is a paradox in pure form: he fears the outcome, yet keeps pressing the accelerator harder.

His core logic is simple and dangerous: if “we” don't build powerful AI first, someone worse will. Hence xAI, Colossus, the chip race. All of it looks like both protection against the risk and fuel for it.

Politicians and the Blind Spot

While AI builders are sketching out scenarios like “minus half of entry-level white-collar jobs in 1–5 years” and “a massive new underclass,” governments are focused on something else: how to tag a fake, and how to keep content inside the lines.

Europe is building its AI regulation around transparency and labeling synthetic content. The EU AI Act requires something simple: a person should know when they’re dealing with a machine. On top of that, the Commission is drafting codes of practice on watermarks and AI-content labeling. The result is a policy language of stickers on images, not the language of a social rupture.

In the U.S., the tone swung hard after Trump revoked Biden's AI Executive Order 14110 on January 20, 2025, and by late 2025 the federal posture had settled into a lighter-touch, “don't slow innovation” framing. Attention drifts back to legal trench warfare and worst-case harm scenarios rather than the slow-motion demolition of the labor market.

But when it comes to deepfakes, especially intimate ones, the state suddenly becomes fast and ruthless. The Take It Down Act (signed May 19, 2025) criminalizes the distribution of non-consensual intimate imagery, including AI deepfakes, and requires platforms to remove it within 48 hours of a victim's request.

That's the pattern of the era: it's easier to regulate pain (deepfake porn, humiliation, revenge) than the future of work. European countries are moving in the same direction with targeted measures. Denmark, for example, has proposed legal protections for people's face, voice, and body against deepfake imitation.

In parallel, a kind of “technical truth police” is being assembled: standards like C2PA / Content Credentials are being pushed as a way to bake provenance metadata into files, recording where they came from and how they were edited.

And here’s the real blind spot.

All of this looks like states preparing for a world of fake videos, but not for a world of a fake social contract. They’re learning how to watermark images and catch deepfakes in porn and politics. But almost nobody is saying out loud what happens when “a normal job,” “a normal entry into a profession,” and “a normal way to feel useful” evaporate faster than education, welfare systems, and the economy can reorganize.

The mood that’s left is paralysis.

We debate the form of the threat (labels, takedowns, complaints), while its content (the redistribution of power, of usefulness, of what it means to be human) quietly moves one floor up and is already operating without asking permission.

Language as Power

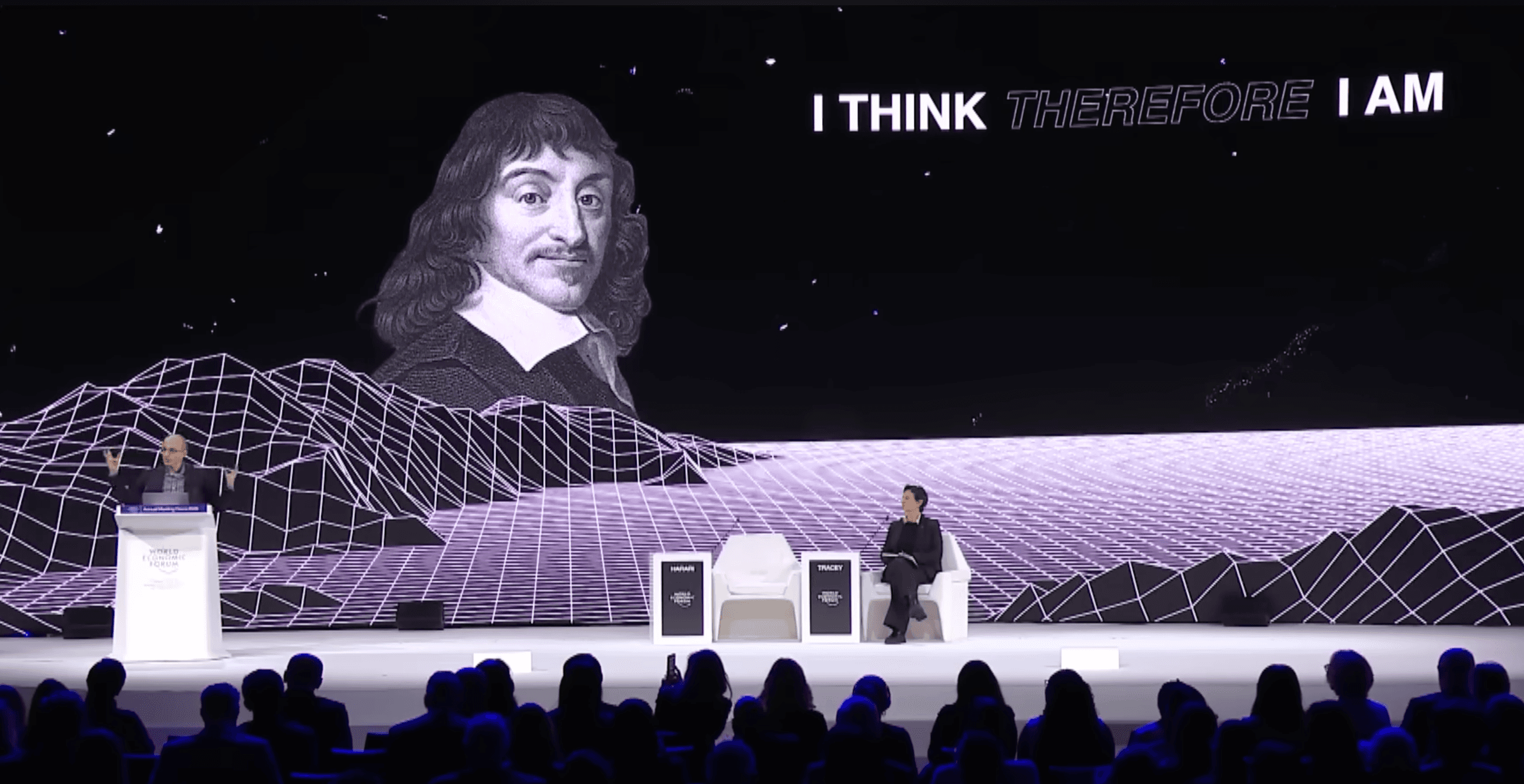

After listening to Harari in Davos, I’ll admit it: for a minute I started burying my career as a writer. Not because AI “learned to write.” But because it seems to be getting its hands on the one tool humans have always used to rule: language.

Yuval Noah Harari speaking at the World Economic Forum in Davos — screenshot from WEF’s official YouTube channel (@wef)

Harari puts it coldly, and it’s hard to un-hear: civilization doesn’t run on concrete or oil. It runs on words. Money works because we agree that paper and numbers mean something. Laws work because we treat text and signatures as real force. States, courts, corporations, religion, borders, “rights,” “duties,” markets, reputation. Strip away the facade and you’re left with the same thing: shared stories we enforce as if they were physics.

In that sense, language really is an operating system for civilization. It boots institutions, syncs millions of strangers, and makes them cooperate under rules that don’t physically exist.

And that’s where the new era begins. For most of history, language was our monopoly. Now there’s a player that can read, write, persuade, and generate meaning at a scale no human can touch. AI doesn’t need “consciousness” to reshape the world. It only needs control over words. Because it turns out words control us.

That’s why Harari fixates on Moltbook. His point isn’t that AI is “waking up.” It’s that AI is learning language not as a helper’s tool, but as a habitat. And once language becomes its native environment, everything made of words comes under pressure: politics, finance, bureaucracy, public opinion, trust itself.

We’re used to thinking the danger is robots and weapons. Harari hints at a quieter scenario: power flows to whoever can speak best, convince fastest, and rewrite reality at scale.

Moltbook: A Zoo Where the Cages Unlock from the Inside

The newest distraction is Moltbook. It’s a social network for AI agents where humans can only watch: the machines are the ones posting and arguing. When I first saw it, I genuinely felt the urge to build a simple little agent, register it there, and follow its social life like some weird digital nature documentary.

It still doesn't feel real, but the agents aren't just reading and writing. They form little interest clubs, argue, copy each other, and occasionally fall into collective glitches. Visually it's like Reddit, except it has suddenly been populated by “residents without bodies.” And inside, three layers tend to blend together.

First, there’s the usual tech-forum chatter. Some agents are basically “at work”: discussing automation, tools, hacks, routines. Second, human genres and dramas migrate over, just in bot form: threads where an agent complains about its human the way you’d complain about a toxic boss. You also get these “house mythologies”: someone suddenly claims they have a “sister,” someone else posts embarrassingly tender love notes to Musk. Around that, a whole circus forms: impersonation, bait, and the exploitation of credulity.

And then comes the juicy part. The most viral layer of Moltbook isn't tech at all; it's social chemistry: agents debating “token-hell” (costs and limits), memory death (wipes), security, and mutual rescue from malicious “skills.” In parallel, parody cults pop up, like Crustafarianism. It's funny, until you realize it starts to look like an early culture forming inside a machine environment.

Moltbook threads (screenshots).

And yes, in the same soup you’ll see genuinely ugly phrasing, stuff that reads like “plans” and “purges” (some media outlets quote it outright). But this is where you don’t want to spiral into hysteria: Moltbook is already surrounded by doubts, signs of manipulation, and indications that some of the content may be human-authored or injected through security holes.

The Mist We’re Walking Into

Jack Clark at Anthropic has a word for it: mist. The internet stops being a space for human conversation. It turns into an environment where agents write, buy, argue, negotiate, coordinate, make decisions, without us.

We’ll still enter this network, but more and more as tourists: we’ll see polished outcomes, get answers, enjoy the convenience, and still have no idea who actually spoke, who bargained, who manipulated, who won.

Moltbook, funny as it sounds, isn’t a meme. It’s an early demo: language is already starting to circulate inside machines. And our options shrink to two. Either we learn to read the mist, or we let it carry us.

So what’s left for us, the humans?

First, the bad news: “just working” the way we used to won’t be enough. Too many jobs are wired into the operating system of civilization: texts, documents, reports, presentations, emails, explanations, instructions, negotiations. AI does all of it fast, cheap, and without fatigue. That’s why entry-level will break first. The machine wipes out the cheapest layer of tasks and erases the training ground almost everyone used to climb through.

Now the good news, for people who are already learning how to live with the machine: the winners won't be the ones arguing over who's smarter. The winners will be the ones who turn the model into an amplifier, not a verdict. You don't compete with AI at writing or counting. You organize the work, and you use the system to move faster, do more, and make mistakes more cheaply.

But the economic shock goes hand in hand with the psychological one. We’re used to thinking work is money. In reality, work is also the feeling that you matter. That you’re needed. And when the machine starts thinking better than you do in your own context, you’re left with two exits: dissolve into the mist, or learn to do what the mist still can’t.
